To summarize, web scraping is the process of gathering data from web pages automatically instead of browsing them by hand, which cuts the time needed enormously.

The Main Steps of Web Scraping

Getting started with Node.js web scraping is simple, and the method can be broken down into three main steps.

The internet has a wide variety of information for human consumption. But this data is often difficult to access programmatically if it doesn't come in the form of a dedicated REST API. With Node.js tools like jsdom, you can scrape and parse this data directly from web pages to use for your projects and applications.

The first step in web scraping is downloading the source code of a page from a remote server. npm is the default package management tool for Node.js, and since we'll be using packages to simplify web scraping, npm makes consuming them fast and painless. Before writing any code, you need Node.js and npm installed on your system.

Let's use the example of needing MIDI data to train a neural network that can generate classic Nintendo-sounding music. In order to do this, we'll need a set of MIDI music from old Nintendo games. Using jsdom we can scrape this data from the Video Game Music Archive.

Getting started and setting up dependencies

Before moving on, make sure you have an up-to-date version of Node.js and npm installed.

Navigate to the directory where you want this code to live and run the following command in your terminal to create a package for this project:

npm init --yes

The --yes argument runs through all of the prompts that you would otherwise have to fill out or skip. Now we have a package.json for our app.

For making HTTP requests to get data from the web page we will use the Got library, and for parsing through the HTML we'll use jsdom.

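Run the following command in your terminal to install these libraries (Got is pinned to version 11 here because later releases are ESM-only and cannot be loaded with require):

npm install got@11 jsdom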

jsdom is a pure-JavaScript implementation of many web standards, making it a familiar tool to use for lots of JavaScript developers. Let's dive into how to use it.

Using Got to retrieve data to use with jsdom

First let's write some code to grab the HTML from the web page, and look at how we can start parsing through it. The following code will send a GET request to the web page we want, and will create a jsdom object with the HTML from that page, which we'll name dom:
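A minimal sketch of this step follows. It assumes Got v11 or earlier (loadable with require) and jsdom are installed, and it assumes the NES section of the Video Game Music Archive lives at the URL stored in vgmUrl; the helper name pageTitle is only illustrative.

```javascript
// index.js
// Assumption: this URL points at the NES section of the Video Game Music Archive.
const vgmUrl = 'https://www.vgmusic.com/music/console/nintendo/nes';

// Return the text of the <title> element of a jsdom (or browser) document.
function pageTitle(document) {
  return document.querySelector('title').textContent;
}

async function main() {
  // Required here so a missing dependency is reported by the catch below.
  const got = require('got');
  const { JSDOM } = require('jsdom');

  // Send a GET request and build a jsdom object from the returned HTML.
  const response = await got(vgmUrl, { timeout: { request: 10000 } });
  const dom = new JSDOM(response.body);

  console.log(pageTitle(dom.window.document));
}

main().catch(err => console.error(err.message));
```

The 10 second request timeout is an added safety net rather than something the tutorial requires; drop it if you prefer Got's defaults.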

When you pass the JSDOM constructor a string, you will get back a JSDOM object, from which you can access a number of usable properties such as window. As seen in this code, you can navigate through the HTML and retrieve DOM elements for the data you want using a query selector.

For example, querySelector('title').textContent will get you the text inside of the <title> tag on the page. If you save this code to a file named index.js and run it with the command node index.js, it will log the title of the web page to the console.

Using CSS Selectors with jsdom

If you want to get more specific in your query, there are a variety of selectors you can use to parse through the HTML. Two of the most common ones search for elements by class or by ID. If you wanted to get a div with the ID of 'menu' you would use querySelectorAll('#menu'), and if you wanted all of the header columns in the table of VGM MIDIs, you'd use querySelectorAll('td.header').

What we want on this page are the hyperlinks to all of the MIDI files we need to download. We can start by getting every link on the page using querySelectorAll('a'). Add the following to your code in index.js:
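One way this could look is sketched below, under the same assumptions as before: Got v11 or earlier, jsdom installed, and an assumed archive URL. The function name logLinks is hypothetical.

```javascript
// index.js
// Assumption: this URL points at the NES section of the Video Game Music Archive.
const vgmUrl = 'https://www.vgmusic.com/music/console/nintendo/nes';

// Log the href of every <a> element found in the document.
function logLinks(document) {
  document.querySelectorAll('a').forEach(link => {
    console.log(link.href);
  });
}

async function main() {
  // Required here so a missing dependency is reported by the catch below.
  const got = require('got');
  const { JSDOM } = require('jsdom');

  const response = await got(vgmUrl, { timeout: { request: 10000 } });
  const dom = new JSDOM(response.body);

  logLinks(dom.window.document);
}

main().catch(err => console.error(err.message));
```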

This code logs the URL of every link on the page. We're able to look through all elements from a given selector using the forEach function. Iterating through every link on the page is great, but we're going to need to get a little more specific than that if we want to download all of the MIDI files.

Filtering through HTML elements

Before writing more code to parse the content that we want, let’s first take a look at the HTML that’s rendered by the browser. Every web page is different, and sometimes getting the right data out of them requires a bit of creativity, pattern recognition, and experimentation.

Our goal is to download a bunch of MIDI files, but there are a lot of duplicate tracks on this webpage, as well as remixes of songs. We only want one of each song, and because our ultimate goal is to use this data to train a neural network to generate accurate Nintendo music, we won't want to train it on user-created remixes.

When you're writing code to parse through a web page, it's usually helpful to use the developer tools available to you in most modern browsers. If you right-click on the element you're interested in, you can inspect the HTML behind that element to get more insight.

You can write filter functions to fine-tune which data you want from your selectors. These are functions which loop through all elements for a given selector and return true or false based on whether they should be included in the set or not.

If you looked through the data that was logged in the previous step, you might have noticed that there are quite a few links on the page that have no href attribute, and therefore lead nowhere. We can be sure those are not the MIDIs we are looking for, so let's write a short function that filters those out and keeps only the elements whose href attribute leads to a .mid file:
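A possible shape for that filter is sketched here. It only relies on the href property, so it works on jsdom anchor elements as well as on plain objects; the file names in the examples are made up for illustration.

```javascript
// Keep only links whose href points at a .mid file.
function isMidi(link) {
  // Anchors without an href attribute report an empty (or missing) href.
  if (typeof link.href !== 'string' || link.href === '') {
    return false;
  }
  return link.href.toLowerCase().endsWith('.mid');
}

// Hypothetical examples:
console.log(isMidi({ href: 'Zelda_Overworld.mid' })); // true
console.log(isMidi({ href: 'index.html' }));          // false
console.log(isMidi({ href: '' }));                    // false
```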

Now we have the problem of not wanting to download duplicates or user generated remixes. For this we can use regular expressions to make sure we are only getting links whose text has no parentheses, as only the duplicates and remixes contain parentheses:
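A small sketch of such a check, assuming the link text is available as textContent (as it is on jsdom elements). The sample titles are invented for illustration.

```javascript
// Keep only links whose visible text contains no parentheses.
function noParens(link) {
  // Matches an opening or a closing parenthesis anywhere in the text.
  const parensRegex = /[()]/;
  return !parensRegex.test(link.textContent);
}

// Hypothetical examples:
console.log(noParens({ textContent: 'Overworld' }));         // true
console.log(noParens({ textContent: 'Overworld (2)' }));     // false
console.log(noParens({ textContent: 'Overworld (Remix)' })); // false
```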

Try adding these to your code in index.js by creating an array out of the collection of HTML Element Nodes that are returned from querySelectorAll and applying our filter functions to it:
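Put together, the script might look like the sketch below. The filters are repeated so the example stands on its own; Got v11 or earlier, jsdom, and the archive URL are assumptions, and midiLinks is an illustrative name.

```javascript
// index.js
// Assumption: this URL points at the NES section of the Video Game Music Archive.
const vgmUrl = 'https://www.vgmusic.com/music/console/nintendo/nes';

// True for links whose href ends in .mid.
const isMidi = link =>
  typeof link.href === 'string' && link.href.toLowerCase().endsWith('.mid');

// True for links whose visible text has no parentheses.
const noParens = link => !/[()]/.test(link.textContent);

// querySelectorAll returns a NodeList, which has no filter method,
// so copy it into a real array before applying the filters.
function midiLinks(document) {
  return Array.from(document.querySelectorAll('a'))
    .filter(isMidi)
    .filter(noParens);
}

async function main() {
  // Required here so a missing dependency is reported by the catch below.
  const got = require('got');
  const { JSDOM } = require('jsdom');

  const response = await got(vgmUrl, { timeout: { request: 10000 } });
  const dom = new JSDOM(response.body);

  midiLinks(dom.window.document).forEach(link => console.log(link.href));
}

main().catch(err => console.error(err.message));
```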

Run this code again and it should only be printing .mid files, without duplicates of any particular song.

Downloading the MIDI files we want from the webpage

Now that we have working code to iterate through every MIDI file that we want, we have to write code to download all of them.

In the callback function for looping through all of the MIDI links, add this code to stream the MIDI download into a local file, complete with error checking:
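The download step could be sketched as follows. This is one possible approach rather than the only one: it uses got.stream together with Node's stream pipeline helper, assumes Got v11 or earlier, and saves into a MIDIs directory whose name is an assumption. The helpers saveStream and downloadMidi are hypothetical names.

```javascript
const fs = require('fs');
const path = require('path');
const { pipeline } = require('stream/promises');

// Write any readable stream to filePath. Resolves once the file is fully
// written and rejects if either the read side or the write side fails.
async function saveStream(readable, filePath) {
  await fs.promises.mkdir(path.dirname(filePath), { recursive: true });
  await pipeline(readable, fs.createWriteStream(filePath));
}

// Download one MIDI file from baseUrl into the MIDIs directory (assumed name).
function downloadMidi(baseUrl, fileName) {
  // Required here so the helpers above stay usable without Got installed.
  const got = require('got');
  const url = `${baseUrl}/${fileName}`;

  return saveStream(got.stream(url), path.join('MIDIs', fileName))
    .then(() => console.log(`Finished ${fileName}`))
    .catch(err => console.error(`Error on ${url}: ${err.message}`));
}
```

Inside the loop over the filtered links, this could be called as downloadMidi(vgmUrl, link.href), where vgmUrl is the page URL the links were scraped from.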

Run this code from a directory where you want to save all of the MIDI files, and watch your terminal screen display all 2230 MIDI files that you downloaded (at the time of writing this). With that, we should be finished scraping all of the MIDI files we need.

Go through and listen to them and enjoy some Nintendo music!

The vast expanse of the World Wide Web

Now that you can programmatically grab things from web pages, you have access to a huge source of data for whatever your projects need. One thing to keep in mind is that changes to a web page’s HTML might break your code, so make sure to keep everything up to date if you're building applications on top of this. You might want to also try comparing the functionality of the jsdom library with other solutions by following tutorials for web scraping using Cheerio and headless browser scripting using Puppeteer or a similar library called Playwright.

If you're looking for something to do with the data you just grabbed from the Video Game Music Archive, you can try using Python libraries like Magenta to train a neural network with it.

I’m looking forward to seeing what you build. Feel free to reach out and share your experiences or ask any questions.

- Email: sagnew@twilio.com

- Twitter: @Sagnewshreds

- Github: Sagnew

- Twitch (streaming live code): Sagnewshreds

Web scraping Node.js example: in this tutorial, you will learn how to retrieve data from websites or web pages using Node.js and Cheerio.

What is web scraping?

Web scraping is a technique used to retrieve data from websites using a script. Web scraping is the way to automate the laborious work of copying data from various websites.

Web scraping is generally performed when the target website doesn't expose an external API for public consumption. Some common web scraping scenarios are:

- Fetch trending posts on social media sites.

- Fetch email addresses from various websites for sales leads.

- Fetch news headlines from news websites.

For example, you may want to scrape medium.com blog posts using the following URL: https://medium.com/search?q=node.js

Open that page, then open the Inspector in Chrome DevTools and look at its DOM elements.

If you look carefully, the markup follows a pattern, so we can scrape it using the elements' class names.

Web Scraping with Node js and Cheerio

Follow the steps below to scrape blog post data from medium.com using Node.js and Cheerio:

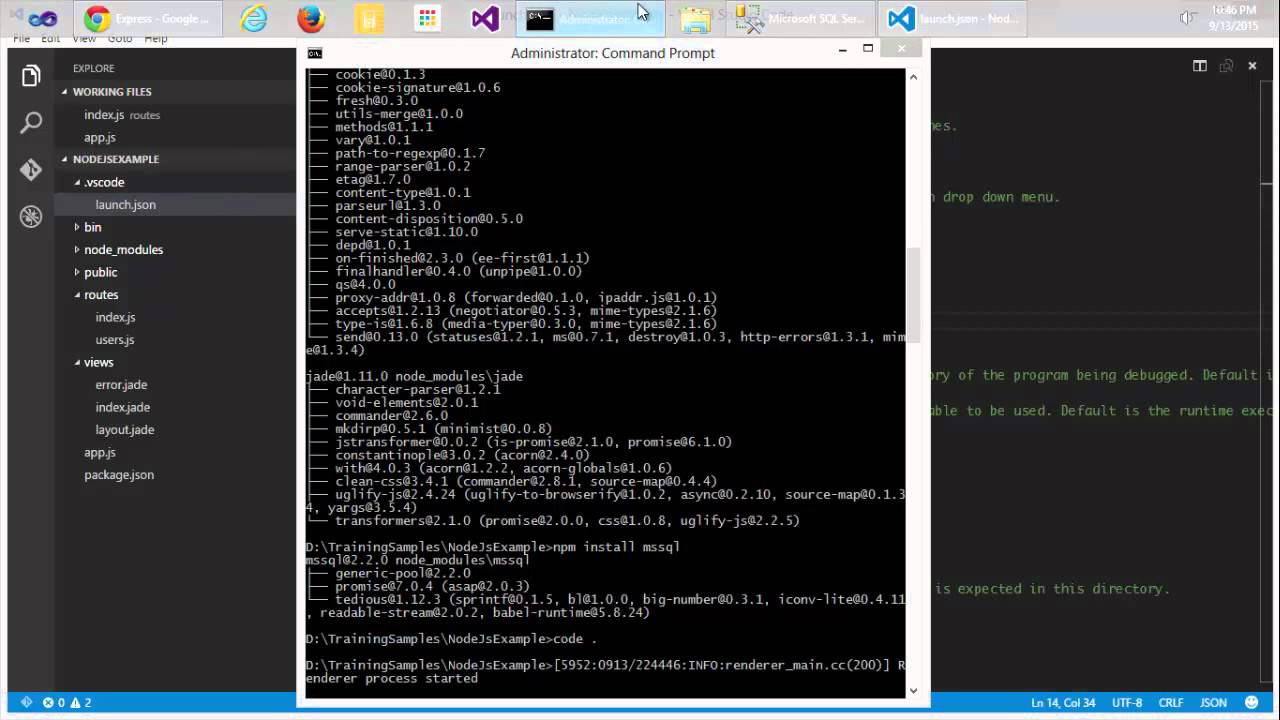

Step 1: Setup Node js Project

Let’s set up the project to scrape medium blog posts. Create a Project directory.

mkdir nodewebscraper

cd nodewebscraper

npm init --yes

Install the dependencies used by the app:

npm install express request cheerio express-handlebars body-parser

Step 2: Making HTTP Request

First, make the HTTP request to fetch the HTML of the web page:

request(`https://medium.com/search?q=${tag}`, (err, response, html) => {

//returns all elements of the webpage

})

Step 3: Extract Data From Blog Posts


Once you retrieve the HTML of the blog posts from medium.com, you can use Cheerio to scrape the data that you need:

const $ = cheerio.load(html)

This loads the HTML into the $ variable. If you have used jQuery before, you will recognize the name: $ is used here simply to follow that naming convention.


Now, you can traverse through the DOM tree.

You need only the title and link of each scraped blog post, so select those elements using either their own class name or the class name of a parent element.

First, get the DOM elements of all the blog posts, which have js-block as a class name.

$('.js-block').each((i, el) => {

//This is the Class name for all blog posts DIV.

})

The each method loops through all the elements that have the class name js-block.

Next, scrape the title and link of each blog post from medium.com.

$('.js-block').each((i, el) => {

const title = $(el)

.find('h3')

.text()

const article = $(el)

.find('.postArticle-content')

.find('a')

.attr('href')

let data = {

title,

article,

}

console.log(data)

})

This scrapes the blog posts for a given tag.

The full source code of the Node.js web scraper:

app.js

const cheerio = require('cheerio');

const express = require('express');

const exphbs = require('express-handlebars');

const bodyParser = require('body-parser');

const request = require('request');

const app = express();

app.use(bodyParser.json());

app.use(bodyParser.urlencoded({extended : false}));

// express-handlebars 6 and later expose the view engine as exphbs.engine

app.engine('handlebars', exphbs.engine({ defaultLayout : 'main'}));

app.set('view engine','handlebars');

app.get('/', (req, res) => res.render('index', { layout: 'main' }));

app.get('/search',async (req,res) => {

const { tag } = req.query;

let datas = [];

request(`https://medium.com/search?q=${tag}`,(err,response,html) => {

if(!err && response.statusCode === 200){

const $ = cheerio.load(html);

$('.js-block').each((i,el) => {

const title = $(el).find('h3').text();

const article = $(el).find('.postArticle-content').find('a').attr('href');

let data = {

title,

article

}

datas.push(data);

})

}

console.log(datas);

res.render('list',{ datas })

})

})

app.listen(3000,() => {

console.log('server is running on port 3000');

})

Step 4: Create Views

Next, create the folders for your views: inside your nodewebscraper app, create a views folder, and inside it create a new folder named layouts.

Inside the layouts folder, create a view file named main.handlebars and add the following code to your views/layouts/main.handlebars file:

<!DOCTYPE html>

<html>

<head>

<meta charset='UTF-8'>

<meta name='viewport' content='width=device-width, initial-scale=1'>

<meta http-equiv='X-UA-Compatible' content='IE=edge'>

<link rel='stylesheet' href='https://cdnjs.cloudflare.com/ajax/libs/semantic-ui/2.4.1/semantic.min.css'>

<title>Scraper</title>

</head>

<body>

<div>

{{{body}}}

</div>

</body>

</html>

After that, create a new view file named index.handlebars outside the layouts folder:

nodewebscraper/views/index.handlebars

Add the following code to your index.handlebars:

<form action='/search'>

<input type='text' name='tag' placeholder='Search...'>

<input type='submit' value='Search'>

</form>

After that, create a new view file named list.handlebars outside the layouts folder:

nodewebscraper/views/list.handlebars

Add the following code to your list.handlebars:

<div>

{{#each datas}}

<a href='{{article}}'>{{title}}</a>

{{/each}}

</div>

<a href='/'>Back</a>

Step 5: Run development server

node app.js

Then open http://localhost:3000 in your browser.

Important Note:

Depending on how you use these techniques and technologies, your application could be performing illegal actions.